Fine-tune an open source LLM from Postgres data in 5 minutes

Fine-tuning open source LLMs has become incredibly easy, and we'll walk you through it.

Six months ago, fine-tuning an open source LLM was hard and required a number of Python scripts and your own hardware. Now it’s incredibly easy!

We’ll walk you through a simple example of fine-tuning an LLM on a product catalog database in Postgres. A model like this is useful for a few purposes:

Ask questions about the products in the product catalog

Use the LLM for creative jobs (tweets, ads, etc.)

Customer support

Create a Together.ai account

We’ll use Together.ai’s fine-tuning API, which works across a variety of open source LLMs and is very easy to use.

Sign up and retrieve your API key; we’ll use it throughout this tutorial.

Output our product catalog from Postgres to JSONL format

We’ll use a TypeScript script to make this process easy. The result is a JSONL file we can use for fine-tuning. Here is the script we used to move data from Postgres → JSONL:

Specify our Postgres database query

Format the results as JSONL, with one line per product attribute, using the following structure for each JSON line in the document:

```json
{ "text" : "<s>[INST] <<SYS>>Answer the following question<</SYS>> What is the origin of the word JSON?[/INST] It's when Jason misspells his name</s>" }
```

Fine-tuning script:

```typescript
import postgres from "postgres";
import { writeFileSync } from "fs";

async function createFineTuningJSONLFile() {
  // Build the connection string from the standard PG* environment variables
  const URL = `postgres://${process.env.PGUSER}:${process.env.PGPASSWORD}@${process.env.PGHOST}/${process.env.PGDATABASE}?sslmode=require`;
  const sql = postgres(URL, { ssl: "require" });

  // 1. Query the product catalog
  const results = await sql`
    select
      "product id" as productid,
      "pageurl" as url,
      "product name" as product_name,
      "list price" as list_price,
      "sale price" as sale_price,
      "discount percentage" as discount_percentage,
      "product description" as description
    from products limit 1000
  `;

  const prompt_instruction = "Answer the following question";

  // Wrap one question/answer pair in the Llama 2 chat template. JSON.stringify
  // escapes quotes and newlines (e.g. inside descriptions) so each line stays valid JSON.
  const toLine = (question: string, answer: string) =>
    JSON.stringify({
      text: `<s>[INST] <<SYS>>${prompt_instruction}<</SYS>> ${question} [/INST] ${answer}</s>`,
    });

  // 2. Emit one JSONL line per product attribute
  const lines: string[] = [];
  for (const row of results) {
    const id = row.productid;
    lines.push(
      toLine(`What is the URL and webpage for product id ${id}?`, `Product id ${id} url and webpage is located at ${row.url}`),
      toLine(`What is the list price for product id ${id}?`, `Product id ${id} list price is $${row.list_price}`),
      toLine(`What is the sale price for product id ${id}?`, `Product id ${id} sale price is $${row.sale_price}`),
      toLine(`What is the discount percentage for product id ${id}?`, `Product id ${id} discount percentage is ${row.discount_percentage}%`),
      toLine(`What is the description for product id ${id}?`, `Product id ${id} description is ${row.description}`)
    );
  }

  writeFileSync("products.jsonl", lines.join("\n") + "\n", { flag: "w" });
  console.log(`Wrote ${lines.length} lines to products.jsonl`);
  await sql.end(); // close the connection pool so the process can exit
}

createFineTuningJSONLFile().catch((err) => {
  console.error(err);
  process.exit(1);
});
```

The resulting JSONL file for our sample product catalog database can be found here.
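Before uploading, it can be worth sanity-checking the file, since a single malformed line (an unescaped quote in a description, a missing `>` in `<<SYS>>`, or a missing closing `</s>`) is easy to miss by eye. The sketch below is a hypothetical `validateJsonl` helper; its checks encode the prompt template used in this post, not any validation rules published by Together.ai.

```typescript
// Hypothetical shape of one validation problem.
interface LineError {
  line: number; // 1-based line number in the file
  reason: string;
}

// Checks each non-blank line of a JSONL training file against the template
// used in this post: valid JSON, a string "text" field, a <<SYS>> block,
// an [/INST] marker and a closing </s>. Reports at most one error per line.
function validateJsonl(contents: string): LineError[] {
  const errors: LineError[] = [];
  contents.split("\n").forEach((raw, i) => {
    const line = i + 1;
    if (raw.trim() === "") return; // skip blank lines such as the trailing newline

    let record: unknown;
    try {
      record = JSON.parse(raw);
    } catch {
      errors.push({ line, reason: "not valid JSON" });
      return;
    }

    // Guard against non-object JSON values such as null or numbers
    const text =
      typeof record === "object" && record !== null
        ? (record as { text?: unknown }).text
        : undefined;
    if (typeof text !== "string") {
      errors.push({ line, reason: 'missing string "text" field' });
      return;
    }

    if (!text.startsWith("<s>[INST] <<SYS>>") || !text.includes("<</SYS>>")) {
      errors.push({ line, reason: "malformed <<SYS>> block" });
    } else if (!text.includes("[/INST]")) {
      errors.push({ line, reason: "missing [/INST]" });
    } else if (!text.endsWith("</s>")) {
      errors.push({ line, reason: "missing closing </s>" });
    }
  });
  return errors;
}
```

To check the exported file, pass it the output of `readFileSync("products.jsonl", "utf8")` from Node's `fs` module and print any returned errors; an empty array means every line matched the template.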

Use the JSONL file for fine-tuning

Now that we have a correctly formatted JSONL file containing the products from our catalog, let’s start fine-tuning.

Install the Together fine-tuning command line tool

```bash
pip install --upgrade together
```

Export your API key

```bash
export TOGETHER_API_KEY=<APIKEY1234>
```

Upload your JSONL fine-tuning file (make sure to save the file ID from the command's output; a sample JSONL file is available here)

```bash
together files upload ./products.jsonl
```

Start a fine-tuning job

```bash
together finetune create --training-file <FILE_ID_FROM_PREVIOUS_STEP> --model togethercomputer/llama-2-7b-chat
```

We’ll use the llama-2-7b-chat model for our fine-tuning job; however, there are many other open source models to choose from.

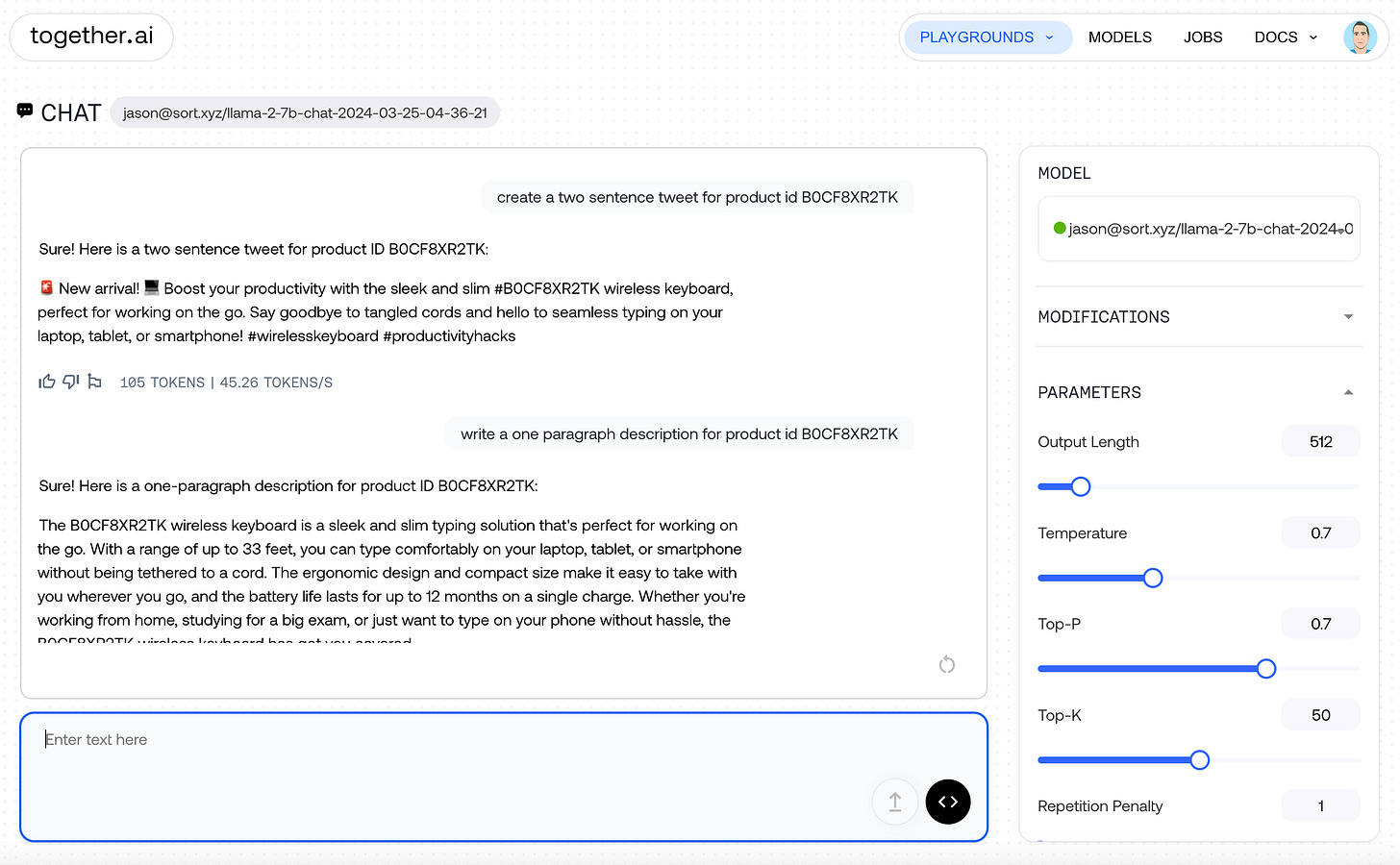

Test our new chatbot

Once the fine-tuning job has completed, we can use the Together.ai interface to chat with our fine-tuned LLM. Make sure you select your fine-tuned model in the chat window.
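If you query the fine-tuned model programmatically rather than in the chat window, it generally helps to send prompts in the same template the training data used. A minimal sketch, assuming the `<<SYS>>` instruction and layout from our JSONL file; `buildInferencePrompt` and `SYSTEM_INSTRUCTION` are hypothetical names, and how you actually send the prompt depends on which Together.ai endpoint you call.

```typescript
// Hypothetical default: the same system instruction used in the training file.
const SYSTEM_INSTRUCTION = "Answer the following question";

// Wraps a user question in the Llama 2 template used for the training data,
// stopping right after [/INST] so the model generates the answer itself.
function buildInferencePrompt(question: string, system: string = SYSTEM_INSTRUCTION): string {
  return `<s>[INST] <<SYS>>${system}<</SYS>> ${question.trim()} [/INST]`;
}

console.log(buildInferencePrompt("What is the sale price for product id 42?"));
// <s>[INST] <<SYS>>Answer the following question<</SYS>> What is the sale price for product id 42? [/INST]
```

Whether to keep the leading `<s>` depends on whether the endpoint's tokenizer already adds a beginning-of-sequence token; check the model's documentation before relying on this exact string.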

Incremental fine-tuning and Sort

Sort is a database collaboration platform that makes it easy for teams to collaborate on database changes, resulting in better data quality.

Incremental fine-tuning is still at a very early stage; however, we see it as a potential step in a database's collaboration workflow (similar to CI on GitHub).
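As a rough illustration of where incremental fine-tuning could fit into such a workflow, the sketch below picks out products that are new or changed since the previous export, so that only those rows need fresh training lines. `Product` and `changedProducts` are hypothetical; the fields mirror the columns selected by the export query above, and deleted products are not handled.

```typescript
// Hypothetical row shape, mirroring the columns selected by the export query.
interface Product {
  productid: string;
  url: string;
  product_name: string;
  list_price: number;
  sale_price: number;
  discount_percentage: number;
  description: string;
}

// Returns products that are new, or whose fields differ from the previous
// snapshot. Comparing JSON.stringify output assumes both snapshots build rows
// with the same key order (true when both come from the same query).
// Products deleted since the previous snapshot are not reported.
function changedProducts(previous: Product[], current: Product[]): Product[] {
  const before = new Map<string, string>(
    previous.map((p) => [p.productid, JSON.stringify(p)] as [string, string])
  );
  return current.filter((p) => before.get(p.productid) !== JSON.stringify(p));
}
```

The rows returned here could then be fed through the same JSONL formatting step to produce a small, incremental training file.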

Fine-tuning LLMs will be useful for many use cases, including:

Product catalogs

Add thumbs up / thumbs down to LLM responses and fine-tune based on users’ reactions

Chatbot personalities

Etc.
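For the thumbs up / thumbs down idea, one simple approach is to keep only the answers users liked and turn them into additional training lines in the same format as the product catalog file. This is a minimal sketch assuming a hypothetical `FeedbackEntry` record and `feedbackToJsonl` helper; thumbs-down responses are simply dropped here rather than used as negative examples.

```typescript
// Hypothetical record of one chatbot exchange plus the user's reaction.
interface FeedbackEntry {
  question: string;
  answer: string;
  rating: "up" | "down";
}

// Same system instruction as the product catalog training data.
const SYSTEM = "Answer the following question";

// Keeps only thumbs-up answers and formats each as one JSONL training line
// in the Llama 2 template used throughout this post.
function feedbackToJsonl(entries: FeedbackEntry[]): string {
  return entries
    .filter((e) => e.rating === "up")
    .map((e) =>
      JSON.stringify({
        text: `<s>[INST] <<SYS>>${SYSTEM}<</SYS>> ${e.question} [/INST] ${e.answer}</s>`,
      })
    )
    .join("\n");
}
```

The output can be appended to `products.jsonl` (or uploaded as its own file) before starting the next fine-tuning job.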

Get started with Sort!

The best way to get started with Sort is by browsing a sample database, or signing up and adding a small database to become familiar with Sort workflows. As always, please reach out to us directly with any questions: info@sort.xyz